Installing the server on RAID:

http://matarosensefils.net/wiki/index.php?n=Proxmox.DebianJessieNetinstall

In short, I create 3 partitions on each disk: 32 GB for / (as a RAID member), 4 GB of swap, and the remaining space as another RAID member.

Then we build two RAID arrays, with the boot option enabled on the one for /.

After installation, the second RAID array (the data one) is left in this state:

# cat /proc/mdstat

md1 : active (auto-read-only) raid1 sdb3[1] sda3[0]

resync=PENDING

To force the resync:

# mdadm --readwrite /dev/md1
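If more than one array comes up in auto-read-only mode, you can script both the check and the fix. Here is a minimal sketch. The function names `list_auto_readonly` and `force_resync_all` are hypothetical, and the awk pattern assumes the `/proc/mdstat` line format shown above:

```shell
#!/bin/sh
# Print the name of every md array that /proc/mdstat reports as auto-read-only.
# The file is a parameter so the function can also be tried on a saved copy.
list_auto_readonly() {
    local mdstat="${1:-/proc/mdstat}"
    # Array lines look like: "md1 : active (auto-read-only) raid1 sdb3[1] sda3[0]"
    awk '$1 ~ /^md[0-9]+$/ && /\(auto-read-only\)/ { print $1 }' "$mdstat"
}

# Switch each of those arrays to read-write so the pending resync starts.
# Needs root and mdadm.
force_resync_all() {
    local md
    for md in $(list_auto_readonly "$@"); do
        mdadm --readwrite "/dev/$md"
    done
}
```

After running `force_resync_all` as root, `cat /proc/mdstat` should show the resync progress instead of `resync=PENDING`.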

We mount the second RAID array as the data volume. First, look up its UUID:

# blkid

/dev/sda1: UUID="d89395a1-068d-16f8-09ed-4e793da49013" UUID_SUB="98103b71-f3c7-3c72-d885-4553efb5ffed" LABEL="(none):0" TYPE="linux_raid_member" PARTUUID="0004fd66-01"

/dev/sda2: UUID="4c6564a2-4033-4759-9195-37c8936a60b3" TYPE="swap" PARTUUID="0004fd66-02"

/dev/sda3: UUID="c53730c2-2f9d-9b3d-843e-468522d1b24b" UUID_SUB="c93afabd-f1e6-2813-35d4-710b8a14eb35" LABEL="(none):1" TYPE="linux_raid_member" PARTUUID="0004fd66-03"

/dev/sdb1: UUID="d89395a1-068d-16f8-09ed-4e793da49013" UUID_SUB="71ed0974-d4b4-ddb4-5f20-7ed057c143cc" LABEL="(none):0" TYPE="linux_raid_member" PARTUUID="0005e1aa-01"

/dev/sdb2: UUID="022d3f83-3481-47e7-8f72-3754a223924e" TYPE="swap" PARTUUID="0005e1aa-02"

/dev/sdb3: UUID="c53730c2-2f9d-9b3d-843e-468522d1b24b" UUID_SUB="e92d76ad-f615-464c-b8ae-b0b1783a8978" LABEL="(none):1" TYPE="linux_raid_member" PARTUUID="0005e1aa-03"

/dev/md0: UUID="21d2ef5b-d091-465e-b72f-c1368b94a144" TYPE="ext4"

/dev/md1: UUID="c3e9ffe8-fb24-405d-abf9-885f201d68c4" TYPE="ext4"

Add it to /etc/fstab:

UUID=c3e9ffe8-fb24-405d-abf9-885f201d68c4 /datos ext4 errors=remount-ro 0 1
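You can also read the UUID directly instead of copying it out of the full `blkid` listing. This sketch assumes the data array is `/dev/md1` and the mount point is `/datos`, as above. `fstab_entry` is a hypothetical helper. fstab(5) reserves pass number 1 for the root file system and recommends 2 for the others, so the helper defaults to 2:

```shell
#!/bin/sh
# Print one /etc/fstab line for a filesystem identified by UUID.
# Arguments: UUID, mount point, [type], [options], [fsck pass]
fstab_entry() {
    local uuid="$1" mountpoint="$2" fstype="${3:-ext4}" options="${4:-defaults}" pass="${5:-2}"
    printf 'UUID=%s %s %s %s 0 %s\n' "$uuid" "$mountpoint" "$fstype" "$options" "$pass"
}

# Example use (as root; this appends to the real fstab):
#   uuid=$(blkid -s UUID -o value /dev/md1)
#   mkdir -p /datos
#   fstab_entry "$uuid" /datos ext4 errors=remount-ro >> /etc/fstab
#   mount /datos
```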

Proxmox installation

Source: http://pve.proxmox.com/wiki/Install_Proxmox_VE_on_Debian_Jessie#Adapt_your_sources.list

Make sure the name returned by hostname has an entry in /etc/hosts. For example:

127.0.0.1 localhost

192.168.1.100 proxmoxescorxador
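The Proxmox installation guide asks for the hostname to resolve to the node's real IP address, not a loopback address. Here is a quick check as a sketch. `hostname_in_hosts` is a hypothetical helper, and it only looks at `/etc/hosts`, not DNS:

```shell
#!/bin/sh
# Succeed if NAME appears in the hosts file on a line whose address is not
# loopback (127.x.x.x or ::1). Comment lines are skipped.
hostname_in_hosts() {
    local name="$1" hosts="${2:-/etc/hosts}"
    awk -v n="$name" '
        $1 ~ /^#/ { next }
        { for (i = 2; i <= NF; i++)
              if ($i == n && $1 !~ /^127\./ && $1 != "::1") found = 1 }
        END { exit !found }
    ' "$hosts"
}

# Example: hostname_in_hosts "$(hostname)" || echo "add $(hostname) to /etc/hosts"
```

Once the entry is in place, `hostname --ip-address` should print that same address.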

Add the Proxmox repositories:

echo "deb http://download.proxmox.com/debian jessie pvetest" > /etc/apt/sources.list.d/pve-install-repo.list

wget -O- "http://download.proxmox.com/debian/key.asc" | apt-key add -

apt-get update && apt-get dist-upgrade

apt-get install proxmox-ve ntp ssh postfix ksm-control-daemon open-iscsi

After rebooting, we can see that the kernel has changed:

Linux proxmox02 3.16.0-4-amd64 #1 SMP Debian 3.16.7-ckt11-1+deb8u3 (2015-08-04) x86_64 GNU/Linux

Linux proxmox02 4.1.3-1-pve #1 SMP Thu Jul 30 08:54:37 CEST 2015 x86_64 GNU/Linux
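To confirm after the reboot that the Proxmox kernel is running, it is enough to look at the suffix of `uname -r`. This is a sketch. `is_pve_kernel` is a hypothetical name, and it assumes Proxmox kernel releases end in `-pve`, as in the output above:

```shell
#!/bin/sh
# Succeed if the given kernel release (default: the running one) is a Proxmox kernel.
is_pve_kernel() {
    local release="${1:-$(uname -r)}"
    case "$release" in
        *-pve) return 0 ;;
        *)     return 1 ;;
    esac
}

# Example: is_pve_kernel || echo "still on the Debian kernel, reboot into the pve one"
```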

Configure the network as follows:

auto vmbr0

iface vmbr0 inet static

address 192.168.1.100

netmask 255.255.255.0

gateway 192.168.1.1

bridge_ports eth0

bridge_stp off

bridge_fd 0
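You can generate the same stanza for other nodes by changing only the address. This is a sketch. `bridge_stanza` is a hypothetical helper. The `iface eth0 inet manual` line for the physical NIC is an addition not present in the configuration above; it is what the Proxmox installer normally writes for the bridged interface:

```shell
#!/bin/sh
# Print an /etc/network/interfaces fragment: a static-IP bridge on top of one NIC.
# Arguments: bridge, physical NIC, address, netmask, gateway
bridge_stanza() {
    local bridge="$1" nic="$2" address="$3" netmask="$4" gateway="$5"
    cat <<EOF
iface $nic inet manual

auto $bridge
iface $bridge inet static
    address $address
    netmask $netmask
    gateway $gateway
    bridge_ports $nic
    bridge_stp off
    bridge_fd 0
EOF
}

# Example: bridge_stanza vmbr0 eth0 192.168.1.100 255.255.255.0 192.168.1.1
```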

Proxmox cluster

On the first node, which will be the master:

root@proxmox01:/gluster# pvecm create clusterproxmox

Corosync Cluster Engine Authentication key generator.

Gathering 1024 bits for key from /dev/urandom.

Writing corosync key to /etc/corosync/authkey.

root@proxmox01:/gluster# pvecm status

Quorum information

------------------

Date: Tue Aug 11 23:23:53 2015

Quorum provider: corosync_votequorum

Nodes: 1

Node ID: 0x00000001

Ring ID: 4

Quorate: Yes

Votequorum information

----------------------

Expected votes: 1

Highest expected: 1

Total votes: 1

Quorum: 1

Flags: Quorate

Membership information

----------------------

Nodeid Votes Name

0x00000001 1 192.168.1.4 (local)
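Before adding more nodes, it can help to confirm that the cluster is quorate, as the `Quorate: Yes` line above shows. This sketch parses that line. `is_quorate` and `wait_for_quorum` are hypothetical helpers, and the parsing assumes the `pvecm status` format shown above:

```shell
#!/bin/sh
# Read `pvecm status` output on stdin; succeed only if it says "Quorate: Yes".
is_quorate() {
    awk '$1 == "Quorate:" { q = $2 } END { exit !(q == "Yes") }'
}

# Poll `pvecm status` every 5 seconds until the cluster is quorate.
# Gives up (returns 1) after TIMEOUT seconds, default 60.
wait_for_quorum() {
    local timeout="${1:-60}" waited=0
    until pvecm status | is_quorate; do
        [ "$waited" -ge "$timeout" ] && return 1
        sleep 5
        waited=$((waited + 5))
    done
}
```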

On the second node:

root@proxmox02:/mnt# pvecm add 192.168.1.4

The authenticity of host '192.168.1.4 (192.168.1.4)' can't be established.

ECDSA key fingerprint is 8a:88:8a:2a:d2:8f:96:62:c1:85:ab:fc:c7:23:00:11.

Are you sure you want to continue connecting (yes/no)? yes

root@192.168.1.4's password:

copy corosync auth key

stopping pve-cluster service

backup old database

waiting for quorum...OK

generating node certificates

merge known_hosts file

restart services

successfully added node 'proxmox02' to cluster.

Removing a cluster node

If removing a node fails with an error, tell the cluster to expect only one node (e = expected):

root@proxmox01:/var/log# pvecm delnode proxmox02

cluster not ready - no quorum?

root@proxmox01:/var/log# pvecm e 1

root@proxmox01:/var/log# pvecm delnode proxmox02
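The same recovery can be wrapped in one function. This is a sketch. `remove_node` is a hypothetical name, and `pvecm expected` is the full form of the `pvecm e` abbreviation used above. Lowering the expected votes bypasses the quorum safeguard, so it only makes sense when the remaining node really is the only one left:

```shell
#!/bin/sh
# Remove NODE from the cluster. If delnode fails (e.g. "cluster not ready - no quorum?"),
# lower the expected votes (default 1) and retry once.
remove_node() {
    local node="$1" votes="${2:-1}"
    if ! pvecm delnode "$node"; then
        pvecm expected "$votes" && pvecm delnode "$node"
    fi
}

# Example (as root on proxmox01): remove_node proxmox02
```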

Gluster:

We install version 3.7, which is the stable release:

http://download.gluster.org/pub/gluster/glusterfs/3.7/LATEST/Debian/jessie/

Install it:

wget -O - http://download.gluster.org/pub/gluster/glusterfs/LATEST/rsa.pub | apt-key add -

echo deb http://download.gluster.org/pub/gluster/glusterfs/LATEST/Debian/jessie/apt jessie main > /etc/apt/sources.list.d/gluster.list

apt-get update

apt-get install glusterfs-server
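The commands above point at `LATEST` rather than the `3.7` directory, so it is worth checking which version was actually installed. This is a sketch. `gluster_major_minor` is a hypothetical helper, and it assumes the first line of `glusterfs --version` has the form `glusterfs 3.7.3 built on ...`:

```shell
#!/bin/sh
# Read `glusterfs --version` output on stdin and print "major.minor" (e.g. 3.7).
gluster_major_minor() {
    awk 'NR == 1 { split($2, v, "."); print v[1] "." v[2]; exit }'
}

# Example: [ "$(glusterfs --version | gluster_major_minor)" = 3.7 ] || echo "not 3.7"
```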

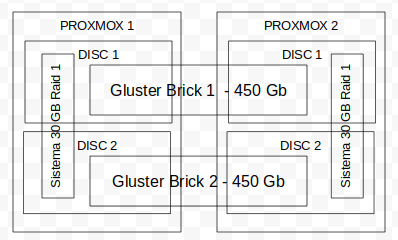

Queremos montar lo siguiente:

Add both servers to /etc/hosts:

root@proxmox1:~# cat /etc/hosts

127.0.0.1 localhost

192.168.2.1 proxmox1

192.168.2.2 proxmox2
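The same entries have to be present on both servers. A small idempotent helper avoids duplicate lines when the setup is repeated. This is a sketch. `add_host` is a hypothetical name, and it only compares exact address/name pairs:

```shell
#!/bin/sh
# Append "IP NAME" to the hosts file unless a line with that IP already lists NAME.
add_host() {
    local ip="$1" name="$2" hosts="${3:-/etc/hosts}"
    if awk -v ip="$ip" -v n="$name" '
        $1 == ip { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$hosts"; then
        return 0    # already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$hosts"
}

# Example (as root on both servers):
#   add_host 192.168.2.1 proxmox1
#   add_host 192.168.2.2 proxmox2
```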

Connect the two servers. From server1:

root@proxmox1:~# gluster peer probe proxmox2

Check that they are connected:

root@proxmox1:~# gluster peer status

Number of Peers: 1

Hostname: proxmox2

Uuid: 62eecf86-2e71-4487-ac5b-9b5f16dc0382

State: Peer in Cluster (Connected)

And the same from server2:

root@proxmox2:~# gluster peer status

Number of Peers: 1

Hostname: proxmox1

Uuid: 061807e7-75a6-4636-adde-e9fef4cfa3ec

State: Peer in Cluster (Connected)
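To run the same check from a script on either server, you can parse the peer status output. This is a sketch. `all_peers_connected` is a hypothetical helper, and it assumes the `Number of Peers:` and `State: ... (Connected)` lines shown above:

```shell
#!/bin/sh
# Read `gluster peer status` output on stdin; succeed only if there is at least one
# peer and every peer's State line ends in "(Connected)".
all_peers_connected() {
    awk '
        /^Number of Peers:/ { expected = $4 }
        /^State:/ { if ($0 ~ /\(Connected\)/) ok++; else bad++ }
        END { exit !(bad == 0 && ok == expected && expected > 0) }'
}

# Example: gluster peer status | all_peers_connected || echo "some peer is down"
```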

Create XFS file systems on the partitions:

root@proxmox1:~# mkfs.xfs -f -b size=512 /dev/sda3

meta-data=/dev/sda3 isize=256 agcount=4, agsize=217619456 blks

= sectsz=512 attr=2, projid32bit=1

= crc=0 finobt=0

data = bsize=512 blocks=870477824, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=0

log =internal log bsize=512 blocks=425038, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

root@proxmox1:~# mkfs.xfs -f -b size=512 /dev/sdb3

meta-data=/dev/sdb3 isize=256 agcount=4, agsize=217619456 blks

= sectsz=512 attr=2, projid32bit=1

= crc=0 finobt=0

data = bsize=512 blocks=870477824, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=0

log =internal log bsize=512 blocks=425038, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0
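The commands above pass `-b size=512`, which sets the block size; that is why the output shows `bsize=512` and `isize=256`. The Gluster setup guides recommend `-i size=512` instead, a 512-byte inode size that leaves room for Gluster's extended attributes. Here is a sketch of that variant. `prepare_brick` and `DRY_RUN` are hypothetical, and the function erases the device unless `DRY_RUN=1` is set:

```shell
#!/bin/sh
# Format DEVICE as XFS with 512-byte inodes and create its mount point.
# DESTRUCTIVE: mkfs.xfs -f erases DEVICE. With DRY_RUN=1 the commands are only printed.
prepare_brick() {
    local dev="$1" mnt="$2" run=""
    if [ "${DRY_RUN:-0}" = 1 ]; then
        run="echo"
    fi
    $run mkfs.xfs -f -i size=512 "$dev" || return 1
    $run mkdir -p "$mnt"
}

# Example (as root): prepare_brick /dev/sda3 /glusterfs/brick1
```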

Mount the partitions on /glusterfs/brick1 and /glusterfs/brick2. First, look up their UUIDs:

# blkid

/dev/sda3: UUID="6afd599f-ea83-4c19-bc71-8ebfce42a332" TYPE="xfs"

/dev/sdb3: UUID="bd39fa7a-6b23-4b43-89e0-693b61ba4581" TYPE="xfs"

The /etc/fstab file:

# brick 1

UUID=6afd599f-ea83-4c19-bc71-8ebfce42a332 /glusterfs/brick1 xfs rw,inode64,noatime,nouuid 0 1

# brick 2

UUID=bd39fa7a-6b23-4b43-89e0-693b61ba4581 /glusterfs/brick2 xfs rw,inode64,noatime,nouuid 0 1

Create the volumes:

root@proxmox1:~# gluster volume create volumen_gluster1 replica 2 transport tcp proxmox1:/glusterfs/brick1 proxmox2:/glusterfs/brick1 force

volume create: volumen_gluster1: success: please start the volume to access data

root@proxmox1:~# gluster volume create volumen_gluster2 replica 2 transport tcp proxmox1:/glusterfs/brick2 proxmox2:/glusterfs/brick2 force

volume create: volumen_gluster2: success: please start the volume to access data

Start them:

root@proxmox1:~# gluster volume start volumen_gluster1

volume start: volumen_gluster1: success

root@proxmox1:~# gluster volume start volumen_gluster2

volume start: volumen_gluster2: success
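Both volumes follow the same pattern, so you can loop over the create and start steps. This is a sketch. `create_replica_volumes` is a hypothetical helper that assumes the `proxmox1`/`proxmox2` host names and the `/glusterfs/brickN` paths used above. The trailing `force` is kept from the original commands. Gluster presumably asks for it here because each brick is the mount point itself rather than a subdirectory of it:

```shell
#!/bin/sh
# For each brick index given (e.g. 1 2), create and start the replica-2 volume
# volumen_glusterN on proxmox1:/glusterfs/brickN and proxmox2:/glusterfs/brickN.
create_replica_volumes() {
    local i vol
    for i in "$@"; do
        vol="volumen_gluster$i"
        gluster volume create "$vol" replica 2 transport tcp \
            "proxmox1:/glusterfs/brick$i" "proxmox2:/glusterfs/brick$i" force &&
        gluster volume start "$vol" || return 1
    done
}

# Example (as root on proxmox1): create_replica_volumes 1 2
```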

Check the status:

root@proxmox1:~# gluster volume status

Status of volume: volumen_gluster1

Gluster process TCP Port RDMA Port Online Pid

------------------------------------------------------------------------------

Brick proxmox1:/glusterfs/brick1 49152 0 Y 20659

Brick proxmox2:/glusterfs/brick1 49152 0 Y 2388

Self-heal Daemon on localhost N/A N/A Y 20726

Self-heal Daemon on proxmox2 N/A N/A Y 2443

Task Status of Volume volumen_gluster1

------------------------------------------------------------------------------

There are no active volume tasks

Status of volume: volumen_gluster2

Gluster process TCP Port RDMA Port Online Pid

------------------------------------------------------------------------------

Brick proxmox1:/glusterfs/brick2 49153 0 Y 20706

Brick proxmox2:/glusterfs/brick2 49153 0 Y 2423

Self-heal Daemon on localhost N/A N/A Y 20726

Self-heal Daemon on proxmox2 N/A N/A Y 2443

Task Status of Volume volumen_gluster2

------------------------------------------------------------------------------

There are no active volume tasks
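The important column is `Online`. For a scripted check, this sketch reads the `Brick` rows and fails if any of them is not `Y`. `all_bricks_online` is a hypothetical helper. It assumes each brick row fits on one line with `Online` as the second-to-last field, as in the output above; very long brick paths may wrap and break that assumption:

```shell
#!/bin/sh
# Read `gluster volume status` output on stdin; succeed only if there is at least
# one Brick row and every Brick row shows "Y" in the Online column.
all_bricks_online() {
    awk '/^Brick / { total++; if ($(NF-1) != "Y") down++ }
         END { exit !(total > 0 && down == 0) }'
}

# Example: gluster volume status | all_bricks_online || echo "a brick is offline"
```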

Mounting as a client

# mount -t glusterfs proxmox01:/volumen_gluster /mnt/glusterfs

/etc/fstab

proxmox01:/volumen_gluster /mnt/glusterfs glusterfs defaults,_netdev 0 2

Inside an LXC container this fails, so you have to create the fuse device by hand:

mknod /dev/fuse c 10 229
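To make this step safe to repeat, you can create the node only when it is missing. This is a sketch. `ensure_fuse` is a hypothetical name. Depending on the container configuration, the host may also have to allow the device, for example with `lxc.cgroup.devices.allow = c 10:229 rwm` in the container's LXC config. That setting is an assumption about the setup, not something the steps above mention:

```shell
#!/bin/sh
# Create the FUSE character device (major 10, minor 229) if it does not exist yet.
# Needs root inside the container when mknod actually runs.
ensure_fuse() {
    local dev="${1:-/dev/fuse}"
    if [ -c "$dev" ]; then
        return 0    # already present as a character device
    fi
    mknod "$dev" c 10 229
}

# Example (as root inside the container): ensure_fuse && mount -a
```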